Daily Backup Script & Cronjob

ABC currently suffers from a huge bottleneck: each day, interns must painstakingly access encrypted password files on core servers and back up those that were updated within the last 24 hours. This introduces human error, lowers security, and takes an unreasonable amount of work.

Create a script called backup.sh which runs daily and automatically backs up any encrypted password files that have been updated in the past 24 hours.

Write Script

The steps are described below in the order I carried them out:

Create I/O Directories

Target Directory: the source directory we want to scan and back up daily

Destination Directory: the archive directory we want to back up the source directory to

- Both directories are on the same drive as the script, so the paths are relative

Set Time Var

- Set the current time, currentTS, in seconds

- We’ll use this variable to label the file name with the timestamp so we know when the backup occurred

- Since we are going to back up the files that were updated in the last 24 hours, we’ll use this variable for that calculation as well. I will cover it later on in the process

Define Output File

- A backup is to be created every 24 hours for any files that were updated in the past 24 hours

- Let’s create a variable; note that bash does not allow spaces around the = in an assignment:

backupFileName="backup-<include the time stamp>.tar.gz"
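As a minimal sketch of the naming step, the snippet below builds the file name from the epoch timestamp and then converts the timestamp back into a readable date. The readableTS variable is only illustrative and is not part of the script; the date -d "@..." form assumes GNU date, as found on Ubuntu.

```shell
#!/bin/bash
# Current time as seconds since the Unix epoch
currentTS=$(date +%s)

# Embed the timestamp in the archive name (no spaces around =)
backupFileName="backup-$currentTS.tar.gz"
echo "$backupFileName"

# Convert the epoch seconds back to a readable date (GNU date syntax)
readableTS=$(date -d "@$currentTS" '+%Y-%m-%d %H:%M:%S')
echo "Backup taken at $readableTS"
```

Being able to decode the number this way makes it easier to tell at a glance which archive belongs to which day.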

# Let's set the first argument to targetDirectory and the second to destinationDirectory

# [TASK 1]

targetDirectory=$1

destinationDirectory=$2

# verify

$ echo "The source/target directory is $targetDirectory"

$ echo "The destination directory is $destinationDirectory"

# set time in seconds

currentTS=$(date +%s)

$ echo $currentTS

1727368127

# [TASK 4]

# create the file name containing the timestamp. The file will be archived with tar and compressed with gzip (.tar.gz)

backupFileName="backup-$currentTS.tar.gz"

Set Path to I/O Directories

- Store the path to the current directory in origAbsPath

- Go to the Destination Directory and store its path in destAbsPath

- Then go back to the current directory that’s saved in origAbsPath from above

- From there go to the Source/Target Directory where the files to be backed up are
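The steps above change directory twice just to learn one absolute path. As a hedged alternative sketch (not the approach the script uses), the lookup can run inside a command substitution, which executes in a subshell and so leaves the current directory untouched. The temporary workDir and its archive folder are hypothetical stand-ins for the real directories.

```shell
#!/bin/bash
# Hypothetical demo directories created under a temporary folder
workDir=$(mktemp -d)
mkdir -p "$workDir/archive"
cd "$workDir" || exit 1

destinationDirectory="archive"   # relative path, as in the walkthrough

# The cd happens inside the $( ) subshell, so the calling shell stays put
destAbsPath=$(cd "$destinationDirectory" && pwd)

echo "Still in: $(pwd)"
echo "Destination resolves to: $destAbsPath"
```

With this form there is no need to remember origAbsPath just to get back, although the script still needs it if the target directory is given as a relative path.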

# Go into the sourceDirectory, create the backup file, move backup file to destinationDirectory

$ pwd

/home/project

# set path to pwd

origAbsPath=$(pwd)

# go to the destinationDirectory

$ cd $destinationDirectory

$ pwd

/home/project/archive

# [TASK 5] Store the absolute path of the current directory

origAbsPath=$(pwd)

# [TASK 6] Go to the destination directory and get its absolute path

cd "$destinationDirectory"

destAbsPath=$(pwd)

# [TASK 7] Change back to the original working directory, then into the target/source directory

cd "$origAbsPath"

cd "$targetDirectory"

Set Time Var

- Earlier we created currentTS, the current time in seconds. Here we’ll use it to check whether each file’s “last modified” date meets the 24-hour condition

- 24 hours in seconds is 24*60*60 = 86400

- Create the time-differential variable yesterdayTS
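The arithmetic can be checked in isolation with a short sketch. The secondsPerDay name is introduced here only for illustration; the script itself inlines the multiplication.

```shell
#!/bin/bash
# Seconds in 24 hours: 24 hours * 60 minutes * 60 seconds
secondsPerDay=$((24 * 60 * 60))
echo "seconds per day: $secondsPerDay"

# The cutoff is "now" minus one day, both expressed in epoch seconds
currentTS=$(date +%s)
yesterdayTS=$((currentTS - secondsPerDay))
echo "cutoff: $yesterdayTS"
echo "difference: $((currentTS - yesterdayTS))"
```

Any file whose last-modified timestamp is greater than the cutoff was changed within the last 24 hours.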

# [TASK 8] Find the files that have been updated within the past 24 hours. The best way to do that is to check whether each file's last-modified date falls within the last 24 hours

# so let's define a cutoff that is always 24 hours before the time the script runs (currentTS)

# currentTS is in seconds, so subtract the number of seconds in 24 hours: 24*60*60

yesterdayTS=$((currentTS - 24 * 60 * 60))

Create an Array

- One way to declare an array is: declare -a <arrayName>

- To append values to an array, use: arrayName+=($variable)

- When you print an array, it lists all its values separated by spaces

- So we’ll create an array, toBackup, to store the list of files that meet the 24-hour “last modified time” condition

- Those are the files we’ll need to back up

- To get the list, we need to loop through the files in the directory and check whether their dates meet the condition

- Next we’ll create the loop and check each file with date -r

Extract Modified Date

- Task 10: Inside the for loop, check whether $file was modified within the last 24 hours

- To get the last-modified date of a file in seconds, use date -r $file +%s, where date -r gives the last time the file was modified

- Then compare the value to yesterdayTS

- Idea: if (( file_last_modified_date > yesterdayTS )), then the file was updated within the last 24 hours! Use (( )) for this, because > inside [[ ]] compares strings rather than numbers

- Task 11: If the file was updated, add $file to the toBackup array we created
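One detail of the comparison is easy to get wrong, so here is a small sketch of it. Inside [[ ]] the > operator compares strings, while (( )) compares integers. Epoch timestamps currently all have ten digits, so the string form happens to give the right answer for them, but the numeric form is the safer habit. The demoFile below is a hypothetical temporary file, and date -r with a file name assumes GNU date.

```shell
#!/bin/bash
# Inside [[ ]], > compares strings: "9" sorts after "10"
if [[ 9 > 10 ]]; then stringResult="true"; else stringResult="false"; fi

# Inside (( )), > compares integers
if (( 9 > 10 )); then numericResult="true"; else numericResult="false"; fi

echo "[[ 9 > 10 ]] -> $stringResult"
echo "(( 9 > 10 )) -> $numericResult"

# Applying the numeric form to a freshly created (hypothetical) file
demoFile=$(mktemp)
yesterdayTS=$(( $(date +%s) - 24 * 60 * 60 ))
fileTS=$(date -r "$demoFile" +%s)
if (( fileTS > yesterdayTS )); then isRecent="yes"; else isRecent="no"; fi
echo "modified in the last 24 hours: $isRecent"
rm -f "$demoFile"
```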

# example: declare an array and append values to it

$ declare -a arrayName

$ arrayName+=("Linux")

$ arrayName+=("is")

$ arrayName+=("here")

$ echo ${arrayName[@]}

Linux is here

# create an array

declare -a toBackup

# [Task 9] Fill in the $( ) part of the for loop. The loop goes through all the files and directories in the current folder; since we are already in the source/target directory from Task 7, all we do is ls the directory for a list

for file in $(ls)

do # [Task 10]

if (( $(date -r "$file" +%s) > yesterdayTS ))

then

# [Task 11]

toBackup+=("$file")

fi

done

Compress & Archive

- Task 12: After the for loop, compress and archive the files listed in the toBackup array into the file named by the variable backupFileName

- Remember, we created the variable backupFileName earlier

- Syntax: tar -czvf <newfile.tar.gz> <files or directories to archive and compress>

- Now that toBackup is a complete list of all the files to be compressed and archived, we can run the command to write them into backupFileName
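One case the steps above do not cover is an empty list: GNU tar refuses to create an archive when it is given no files. The following is a minimal sketch of a guard for that case, using hypothetical demo files in a temporary folder; the script in this walkthrough does not include such a check.

```shell
#!/bin/bash
# Hypothetical demo data in a temporary folder
workDir=$(mktemp -d)
cd "$workDir" || exit 1
echo "secret-a" > ana.txt
echo "secret-b" > doi.txt

backupFileName="backup-$(date +%s).tar.gz"
declare -a toBackup=(ana.txt doi.txt)

# Only call tar when the array has at least one entry
if (( ${#toBackup[@]} > 0 )); then
    # -c create, -z gzip, -f archive file name
    tar -czf "$backupFileName" "${toBackup[@]}"
    echo "Created $backupFileName with ${#toBackup[@]} file(s)"
else
    echo "Nothing to back up"
fi
```

Quoting "${toBackup[@]}" passes each array element to tar as its own argument, which matters once file names contain spaces.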

# [Task 12] take the file list and compress the files into the new tar ball

tar -czvf "$backupFileName" "${toBackup[@]}"

Move file to another directory

- The file $backupFileName is created in the current working directory. In other words, the script creates the backup in the source/target directory, where the source files are

- Then we move the file backupFileName to the destination directory located at destAbsPath

mv "$backupFileName" "$destAbsPath"

Executable Script

Now that the script has been created, we need to make sure it is executable.

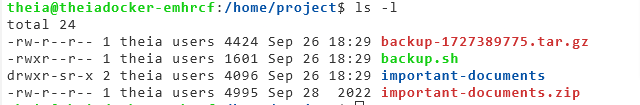

Set Permissions

- We first list the permissions and notice that no one, not even the owner, has execute rights

- We need to give the owner (and others) permission to execute, and we do that with the chmod command
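To see what chmod changes, the sketch below starts a hypothetical stand-in script at rw-r--r-- and adds execute permission for the owner only. Using u+x here is my own narrower choice for illustration; the walkthrough uses uo+x, which also grants execute to others.

```shell
#!/bin/bash
# Hypothetical stand-in for backup.sh, created in a temporary folder
workDir=$(mktemp -d)
script="$workDir/backup.sh"
printf '#!/bin/bash\necho "hello"\n' > "$script"

chmod 644 "$script"          # rw-r--r--: nobody can execute yet
permsBefore=$(ls -l "$script" | cut -c1-10)

chmod u+x "$script"          # add execute permission for the owner only
permsAfter=$(ls -l "$script" | cut -c1-10)

echo "before: $permsBefore"
echo "after:  $permsAfter"
```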

# Verify permissions

$ ls -l backup.sh

-rw-r--r-- 1 theia users 1533 Sep 26 17:31 backup.sh

# set permissions

$ chmod uo+x backup.sh

$ ls -l backup.sh

-rwxr--r-x 1 theia users 1601 Sep 26 18:33 backup.sh

Import Test Data

- Let’s bring in some files and test the script.

Download Zip file

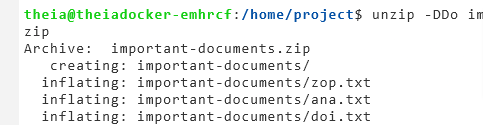

Unzip file

The -DD option tells unzip not to restore the original modified dates, and -o overwrites existing files without prompting

# download file

wget https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBM-LX0117EN-SkillsNetwork/labs/Final%20Project/important-documents.zip

# unzip

unzip -DDo important-documents.zip

Load Package

- After installing unzip, we’ll proceed with unzipping the zip file

- Just to make sure all is well, let’s use touch to update the “last modified date” to now; that way all the files will be backed up for testing purposes

# --- unzip was not installed, so let's install it from a different terminal

yashaya@YASHAYA:~$ sudo apt install unzip

# --- Now that the package is loaded let's unzip

unzip -DDo important-documents.zip

# Update the last-modified date to now with touch; the wildcard matches every file under important-documents/

touch important-documents/*

Now the data files have been downloaded and extracted into their own folder, important-documents, which will become our source/targetDirectory

Execute Script

- Now that the data has been extracted into the correct directory,

- the script is written,

- and the permissions are set,

- let’s test backup.sh by executing the script

- As you see in the first line, we call ./backup.sh, the name of the script

- followed by the Source/Target directory, argument #1: important-documents

- followed by a space and the Destination directory, argument #2: . (the current directory)

dos2unix

Convert from Windows to Unix

- Don’t mind this step. In my case the script was edited in a different editor, which added Windows line endings (carriage returns, shown as ^M) that caused an error

- I’ll use dos2unix to convert the Windows line endings to Unix ones and run the script again
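If dos2unix is not available, the same problem can be detected and repaired with standard tools. This is only a sketch under that assumption: it writes a hypothetical two-line script with Windows line endings, counts the lines containing a carriage return, and strips them with tr.

```shell
#!/bin/bash
workDir=$(mktemp -d)
script="$workDir/demo.sh"

# Write a two-line script with Windows (CRLF) line endings
printf '#!/bin/bash\r\necho "ok"\r\n' > "$script"

# Count the lines that contain a carriage return
crBefore=$(grep -c $'\r' "$script")

# Remove every carriage return and write the result to a new file
tr -d '\r' < "$script" > "$script.unix"
crAfter=$(grep -c $'\r' "$script.unix")

echo "lines with CR before: $crBefore, after: $crAfter"
```

The stray carriage return at the end of the #!/bin/bash line is what produces the "bad interpreter" error, because the kernel looks for a program literally named /bin/bash followed by that character.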

# -- Execute the script

./backup.sh important-documents .

-bash: ./backup.sh: /bin/bash^M: bad interpreter: No such file or directory

# to convert Windows line endings to Unix

# install it first

$ sudo apt install dos2unix

$ dos2unix backup.sh

dos2unix: converting file backup.sh to Unix format...

# -- Let's run the script again

$ ./backup.sh important-documents .

# OUTPUT

The source/target directory is important-documents

The destination directory is .

ana.txt

doi.txt

zop.txt

Backup has been completed successfully! Compressed & Archive file saved at /mnt/d/data/Linux_projects/Final_projects/C6_M4/backup-1727477641.tar.gz

Verify Backup

- As you see in the output above, the backup was successful and the backup file was created

- Let’s verify by using ls -l

- And you can see the .tar.gz file with the timestamp in seconds
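Beyond checking that the archive exists, its contents can be listed without extracting anything. The sketch below builds a small demo archive first so it can run on its own; the file names and backup-demo.tar.gz are hypothetical stand-ins for the real backup.

```shell
#!/bin/bash
workDir=$(mktemp -d)
cd "$workDir" || exit 1

# Build a small demo archive from two hypothetical files
mkdir important-documents
echo "a" > important-documents/ana.txt
echo "b" > important-documents/zop.txt
tar -czf backup-demo.tar.gz -C important-documents ana.txt zop.txt

# -t lists the archive members without extracting them
archiveListing=$(tar -tzf backup-demo.tar.gz)
echo "$archiveListing"
```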

Copy Script to /usr/local/bin

- We need to copy the script file to /usr/local/bin, a standard system-wide location, so cron can call it by a fixed absolute path

- Use cp <sourceFile> <destination>

- When done, verify by going to /usr/local/bin and running ls

yashaya@YASHAYA:/mnt/d/data/Linux_projects/Final_projects/C6_M4$ sudo cp /mnt/d/data/Linux_projects/Final_projects/C6_M4/backup.sh /usr/local/bin/

[sudo] password for yashaya:

# verify it's there

yashaya@YASHAYA:/usr/local/bin$ ls

backup.sh

Schedule Cron Task

- Skip through the parts where cron had to be installed; this happens to be the first time I used Ubuntu on Windows, so it was a new installation and most of the packages were not installed

- So we first check to see if any jobs are scheduled: crontab -l

- We see none, since we just installed the package!

- Create a cronjob with crontab -e

- For testing, we want the files backed up every minute, so enter the crontab entry for it

# --- Let's check for any existing crontab jobs!

C6_M4$ crontab -l

Command 'cronetab' not found, did you mean:

command 'crontab' from deb cron (3.0pl1-137ubuntu3)

command 'crontab' from deb bcron (0.11-9)

command 'crontab' from deb systemd-cron (1.15.18-1)

Try: sudo apt install <deb name>

# --- So let's install it from a different terminal

~$ sudo apt install cron

# --- Check it now

$ crontab -l

no crontab for yashaya

$ crontab -e

$ crontab -l

*/1 * * * * /usr/local/bin/backup.sh /mnt/d/data/Linux_projects/Final_projects/C6_M4/important-documents /mnt/d/data/Linux_projects/Final_projects/C6_M4

Schedule another Cronjob

Let’s say we want to back up the files every 24 hours instead of every minute as we did above

- All we do is go into crontab -e

- Add a crontab entry at the end of the file; delete the other one if we wish, or keep it

- Add this entry to back up every 24 hours, at midnight
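To make the five schedule fields easier to read, here is a sketch that assembles both entries as plain strings. The sourceDir and destDir values are placeholders rather than real paths, and the commented-out install line shows one common non-interactive pattern, not something this walkthrough ran.

```shell
#!/bin/bash
# Fields: minute hour day-of-month month day-of-week command
scriptPath="/usr/local/bin/backup.sh"
sourceDir="/path/to/important-documents"   # placeholder path
destDir="/path/to/archive"                 # placeholder path

# "* * * * *" runs every minute (equivalent to "*/1 * * * *")
everyMinute="* * * * * $scriptPath $sourceDir $destDir"

# "0 0 * * *" runs at minute 0 of hour 0, i.e. once a day at midnight
dailyAtMidnight="0 0 * * * $scriptPath $sourceDir $destDir"

echo "$everyMinute"
echo "$dailyAtMidnight"

# To install an entry without opening an editor, one common pattern is:
#   (crontab -l 2>/dev/null; echo "$dailyAtMidnight") | crontab -
```

Note that cron runs jobs with a minimal environment, which is why absolute paths are used for the script and both directories.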

$ crontab -e

0 0 * * * /usr/local/bin/backup.sh /mnt/d/data/Linux_projects/Final_projects/C6_M4/important-documents /mnt/d/data/Linux_projects/Final_projects/C6_M4

Stop Cronjob

- It has been running every minute

- To stop it, stop the cron service (this pauses every cron job on the machine):

sudo service cron stop

Remove Cronjob

# --- To stop the cron service

~$ sudo service cron stop

# --- To remove the cronjob

~$ crontab -r

yashaya@YASHAYA:/mnt/d/data/Linux_projects/Final_projects/C6_M4$ crontab -l

no crontab for yashaya

Virtual Environment

- If I happen to be running this in a cloud-based virtual environment, I need to start the service manually

# because this is a virtual environment we need to start it manually

$ sudo service cron start

* Starting periodic command scheduler cron

...done.

# after verifying that they are being created stop the task

sudo service cron stop

Full Script File

#!/bin/bash

# This checks if the number of arguments is correct

# If the number of arguments is incorrect ( $# != 2) print error message and exit

if [[ $# != 2 ]]

then

echo "backup.sh target_directory_name destination_directory_name"

exit 1

fi

# This checks if argument 1 and argument 2 are valid directory paths

if [[ ! -d $1 ]] || [[ ! -d $2 ]]

then

echo "Invalid directory path provided"

exit 1

fi

# [TASK 1] Set variables to command line arguments

targetDirectory=$1

destinationDirectory=$2

# [TASK 2] Display the values of the command line arguments

echo "The source/target directory is $targetDirectory"

echo "The destination directory is $destinationDirectory"

# [TASK 3] Get the current timestamp

currentTS=$(date +%s)

# [TASK 4] Create the backup file name

backupFileName="backup-$currentTS.tar.gz"

# We're going to:

# 1: Go into the target directory

# 2: Create the backup file

# 3: Move the backup file to the destination directory

# To make things easier, we will define some useful variables...

# [TASK 5] Store the absolute path of the current directory

origAbsPath=$(pwd)

# [TASK 6] Go to the destination directory and get its absolute path

cd "$destinationDirectory" # <-

destAbsPath=$(pwd)

# [TASK 7] Change to the target directory

cd "$origAbsPath" # <-

cd "$targetDirectory" # <-

# [TASK 8] Calculate the timestamp for 24 hours ago

yesterdayTS=$((currentTS -60*60*24))

declare -a toBackup # Create an array to store list of files to backup

for file in $(ls); do # [TASK 9] Loop through all the files in Target Directory

file_last_modified_date=$(date -r "$file" +%s)

# [TASK 10] Check if the file was modified within the last 24 hours

if (( file_last_modified_date > yesterdayTS )) # or: if (( $(date -r "$file" +%s) > yesterdayTS ))

then

toBackup+=("$file") # [TASK 11] Add the file to the toBackup array

fi

done

# [TASK 12] Create the backup file for all files modified in the last 24 hours

tar -czvf "$backupFileName" "${toBackup[@]}"

# [TASK 13] Move the backup file to the destination directory

mv "$backupFileName" "$destAbsPath"

# [Task 14] Print out a completion notice

echo "Backup has been completed successfully! Compressed & Archive file saved at $destAbsPath/$backupFileName"
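The loop in the script above iterates over $(ls), which splits on whitespace and so breaks on file names that contain spaces. As a hedged variant (my own sketch, not part of the original assignment), a glob keeps each name intact. The demo files live in a temporary folder, and touch -d with a relative date assumes GNU touch.

```shell
#!/bin/bash
workDir=$(mktemp -d)
cd "$workDir" || exit 1

# Two fresh files (one with a space in its name) and one backdated file
touch "new file.txt" recent.txt
touch -d "2 days ago" old.txt

# With nullglob, an empty directory yields zero iterations instead of a literal "*"
shopt -s nullglob

yesterdayTS=$(( $(date +%s) - 24 * 60 * 60 ))
declare -a toBackup=()

for file in *; do
    # Keep the file if its last-modified time is newer than the cutoff
    if (( $(date -r "$file" +%s) > yesterdayTS )); then
        toBackup+=("$file")
    fi
done

printf '%s\n' "${toBackup[@]}"
```

Only "new file.txt" and recent.txt end up in the array; old.txt falls outside the 24-hour window.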